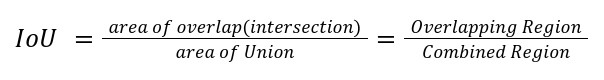

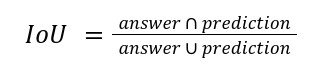

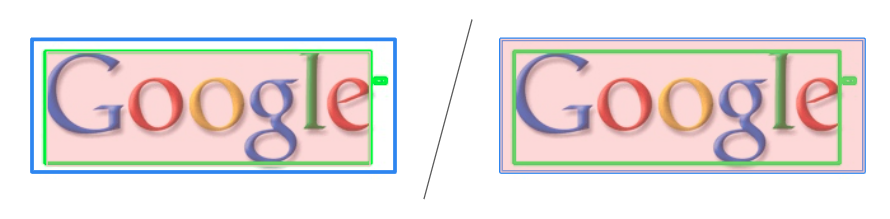

IoU = Intersection over Union

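- IoU measures how much the answer (annotation) region and the output (prediction) region of an object detection model overlap, and that overlap is used to evaluate the model's accuracy.

The question it answers: does the bounding box detected by the model sit in the same place as the annotated bounding box?

Put simply, it is the overlapping area divided by the total (union) area of the two boxes.

You can set a threshold on IoU, for example treating a prediction as correct when its IoU is 50% or higher and as incorrect when it is lower, and judge accuracy from that.

The threshold is commonly set somewhere between 50% and 80%.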
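For axis-aligned boxes, the definition above can also be computed directly from coordinates. The following is a minimal sketch, assuming boxes are given as `(x_min, y_min, x_max, y_max)`; the names `box_iou` and `is_correct` are hypothetical helpers for illustration and are not part of the code in this post. Because it treats coordinates as continuous, its values can differ slightly from a pixel-mask computation.

```python
def box_iou(box_a, box_b):
    """IoU of two axis-aligned boxes given as (x_min, y_min, x_max, y_max)."""
    # Corners of the intersection rectangle.
    ix_min = max(box_a[0], box_b[0])
    iy_min = max(box_a[1], box_b[1])
    ix_max = min(box_a[2], box_b[2])
    iy_max = min(box_a[3], box_b[3])

    # Width and height are clamped at 0 when the boxes do not overlap.
    inter = max(0, ix_max - ix_min) * max(0, iy_max - iy_min)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def is_correct(iou, threshold=0.5):
    """A prediction counts as correct when its IoU reaches the threshold."""
    return iou >= threshold


gt = (0, 0, 10, 10)
pred = (5, 0, 15, 10)
iou = box_iou(gt, pred)  # intersection 50, union 150
print(round(iou, 3), is_correct(iou))
```

Here the two boxes share half of their area, yet the IoU is only about 0.333, so the prediction fails a 50% threshold.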

```python
image_path = "img_1.jpg"
gt_path = "gt_img_1.txt"
pred_path = "img_1_text_detection.txt"


def get_bbox(path):
    # Each line holds one box; the first 8 comma-separated values are
    # the x, y coordinates of its four corners.
    with open(path, 'r') as file:
        bbox = [list(map(int, line.strip().split(",")[:8])) for line in file.readlines()]
    return bbox


annotations = get_bbox(gt_path)
predictions = get_bbox(pred_path)

print("annotations : ")
print(annotations)
print("-"*50)
print("Predictions : ")
print(predictions)

##############Results################
#annotations :
#[[178, 11, 209, 11, 209, 25, 178, 25], [215, 11, 249, 11, 249, 24, 215, 24], [256, 14, 297, 14, 297, 22, 256, 22], [317, 7, 379, 7, 379, 28, 317, 28], [61, 64, 91, 64, 91, 76, 61, 76], [97, 64, 150, 64, 150, 79, 97, 79], [61, 82, 146, 82, 146, 95, 61, 95], [61, 101, 108, 101, 108, 113, 61, 113], [62, 119, 108, 119, 108, 132, 62, 132], [62, 140, 149, 140, 149, 174, 62, 174], [322, 413, 349, 413, 349, 421, 322, 421], [354, 413, 380, 413, 380, 421, 354, 421]]
#--------------------------------------------------
#Predictions :
#[[316, 4, 382, 10, 381, 30, 314, 25], [175, 9, 299, 9, 299, 26, 175, 26], [60, 62, 152, 62, 152, 80, 60, 80], [59, 80, 148, 80, 148, 97, 59, 97], [59, 99, 111, 99, 111, 116, 59, 116], [59, 118, 110, 118, 110, 134, 59, 134], [60, 139, 149, 137, 150, 175, 61, 177], [321, 412, 382, 412, 382, 423, 321, 423]]
```

Preprocessing: reduce each 8-value box to `(x_min, y_min, x_max, y_max)`.

```python
def get_points(bbox):
    # Even indices are x coordinates, odd indices are y coordinates.
    xs = [x for k, x in enumerate(bbox) if k%2==0]
    ys = [y for k, y in enumerate(bbox) if k%2==1]
    # Axis-aligned box enclosing all four corners: (x_min, y_min, x_max, y_max).
    return min(xs), min(ys), max(xs), max(ys)


annotations = [get_points(box) for box in annotations]
predictions = [get_points(box) for box in predictions]

print("annotations : ")
print(annotations)
print("-"*50)
print("Predictions : ")
print(predictions)

##############Results################
#annotations :
#[(178, 11, 209, 25), (215, 11, 249, 24), (256, 14, 297, 22), (317, 7, 379, 28), (61, 64, 91, 76), (97, 64, 150, 79), (61, 82, 146, 95), (61, 101, 108, 113), (62, 119, 108, 132), (62, 140, 149, 174), (322, 413, 349, 421), (354, 413, 380, 421)]
#--------------------------------------------------
#Predictions :
#[(314, 4, 382, 30), (175, 9, 299, 26), (60, 62, 152, 80), (59, 80, 148, 97), (59, 99, 111, 116), (59, 118, 110, 134), (60, 137, 150, 177), (321, 412, 382, 423)]
```
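One side effect of taking the min/max of the corners is that a slightly rotated quadrilateral is replaced by a larger axis-aligned box. The sketch below illustrates this with the first prediction listed above; `polygon_area` (shoelace formula) and `envelope_area` are hypothetical helpers added only for this comparison.

```python
def polygon_area(points):
    """Area of a simple polygon given as flat [x1, y1, x2, y2, ...] (shoelace formula)."""
    xs, ys = points[0::2], points[1::2]
    n = len(xs)
    twice_area = sum(
        xs[i] * ys[(i + 1) % n] - xs[(i + 1) % n] * ys[i] for i in range(n)
    )
    return abs(twice_area) / 2


def envelope_area(points):
    """Area of the axis-aligned box that encloses all corners."""
    xs, ys = points[0::2], points[1::2]
    return (max(xs) - min(xs)) * (max(ys) - min(ys))


quad = [316, 4, 382, 10, 381, 30, 314, 25]  # first prediction from the results above
print(polygon_area(quad), envelope_area(quad))
```

For this box the quadrilateral covers 1371.5 square pixels while its enclosing rectangle covers 1768, so an IoU computed on the rectangles is an approximation for tilted boxes.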

```python
import cv2
import numpy as np


def calculate_acc(ans_mask, out_mask):
    # area of intersection
    intersection = cv2.bitwise_and(ans_mask, out_mask)
    intersection_count = np.count_nonzero(intersection)

    # area of union
    union = cv2.bitwise_or(ans_mask, out_mask)
    union_count = np.count_nonzero(union)

    # Calculate IoU as an integer percentage (0 when both masks are empty)
    if union_count == 0:
        iou = 0
    else:
        iou = int(intersection_count / union_count * 100)
    return iou


def overlap(gt, out):
    # gt : ground_truth (answer)
    # out : output (prediction)
    # Both are (x_min, y_min, x_max, y_max); True when the boxes intersect.
    overlap_1 = not (out[2] < gt[0] or out[3] < gt[1])
    overlap_2 = not (out[0] > gt[2] or out[1] > gt[3])
    return overlap_1 and overlap_2


# cv2.imread returns an array of shape (height, width, channels)
height, width = cv2.imread(image_path).shape[:2]

# empty mask; bbox areas are filled with 255
mask = np.zeros((height, width), dtype=np.uint8)

threshold = 80

for i, gt in enumerate(annotations):
    print(f"bbox {i} : {gt}")
    ans_mask = mask.copy()
    out_mask = mask.copy()
    cv2.rectangle(ans_mask, gt[0:2], gt[2:4], 255, cv2.FILLED)

    for j, out in enumerate(predictions):
        if overlap(gt, out):
            # use the first prediction that overlaps this annotation
            print(f"out : {out}")
            cv2.rectangle(out_mask, out[0:2], out[2:4], 255, cv2.FILLED)
            # predictions.pop(j)
            break

    # calculate iou (0 if no prediction overlapped, because out_mask stays empty)
    iou = calculate_acc(ans_mask, out_mask)

    # thresholding for answer (True or False)
    if iou >= threshold:
        print("True")
    else:
        print("False")
```
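The loop above stops at the first prediction that overlaps an annotation, which is not necessarily the one with the highest IoU. As one possible alternative, the sketch below computes the IoU of every annotation against every prediction with NumPy broadcasting and then picks the best prediction per annotation. The function name `iou_matrix` is hypothetical, the boxes are a subset of the preprocessed results above, and the coordinates are treated as continuous rather than as pixel masks.

```python
import numpy as np


def iou_matrix(gts, preds):
    """Pairwise IoU between N and M boxes in (x_min, y_min, x_max, y_max) form."""
    gts = np.asarray(gts, dtype=float)[:, None, :]      # shape (N, 1, 4)
    preds = np.asarray(preds, dtype=float)[None, :, :]  # shape (1, M, 4)

    # Intersection width and height, clamped at 0 for non-overlapping pairs.
    inter_w = np.clip(
        np.minimum(gts[..., 2], preds[..., 2]) - np.maximum(gts[..., 0], preds[..., 0]),
        0, None)
    inter_h = np.clip(
        np.minimum(gts[..., 3], preds[..., 3]) - np.maximum(gts[..., 1], preds[..., 1]),
        0, None)
    inter = inter_w * inter_h

    area_g = (gts[..., 2] - gts[..., 0]) * (gts[..., 3] - gts[..., 1])
    area_p = (preds[..., 2] - preds[..., 0]) * (preds[..., 3] - preds[..., 1])
    union = area_g + area_p - inter

    # Leave IoU at 0 wherever the union is empty.
    return np.divide(inter, union, out=np.zeros_like(inter), where=union > 0)


annotations = [(317, 7, 379, 28), (62, 140, 149, 174), (322, 413, 349, 421)]
predictions = [(175, 9, 299, 26), (314, 4, 382, 30), (60, 137, 150, 177), (321, 412, 382, 423)]

ious = iou_matrix(annotations, predictions)
best = ious.argmax(axis=1)  # index of the best-matching prediction per annotation
for i, j in enumerate(best):
    print(f"bbox {i} -> prediction {j}, IoU {ious[i, j]:.2f}")
```

Each row of `ious` belongs to one annotation, so `argmax(axis=1)` selects the best match even when several predictions overlap the same annotation.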

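One caveat: when you evaluate a text detection model with IoU, first determine whether the bounding boxes are character-level, word-level, or sentence-level, and compute IoU only between boxes of the same level. If the levels differ, the score becomes less reliable and an accurate comparison becomes difficult.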
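The data in this post happens to show the effect. The first three annotations are separate word boxes, while one prediction, `(175, 9, 299, 26)`, appears to cover all three at once. The sketch below compares that prediction against each word box and against a merged box; merging the words with the hypothetical helper `merge_boxes` is an assumption made only for illustration, not a step from the original code.

```python
def box_iou(a, b):
    """IoU of two (x_min, y_min, x_max, y_max) boxes."""
    inter_w = max(0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def merge_boxes(boxes):
    """Smallest axis-aligned box that encloses every box in the list."""
    return (min(b[0] for b in boxes), min(b[1] for b in boxes),
            max(b[2] for b in boxes), max(b[3] for b in boxes))


word_boxes = [(178, 11, 209, 25), (215, 11, 249, 24), (256, 14, 297, 22)]
line_prediction = (175, 9, 299, 26)

# Word-level annotations against a wider prediction: every IoU is low.
word_ious = [box_iou(w, line_prediction) for w in word_boxes]
# Same prediction against the merged (same-level) box: the IoU is high.
line_iou = box_iou(merge_boxes(word_boxes), line_prediction)

print([round(v, 2) for v in word_ious])
print(round(line_iou, 2))
```

Each word box scores roughly 0.21, 0.21, and 0.16 against the wide prediction, while the merged box scores about 0.79, even though the prediction itself has not changed.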
